Week 8 - Visualizing Sound

Tuning Forks, Musical Instrument, LED Light Source (Projector)

Computers, Humans, Physics

- Computers are physical machines.

- A modern computer CPU is composed of 1,000,000,000+ transistors

- Conductive materials allow electrically charged particles to flow

- Those particles can be pushed along by magnets (among other things)

- Those particles push into other particles, pushing them along.

- The pushing propagates very quickly, though the particles may progress slowly. In fact the particles may just move a little bit back and forth.

- This all works backwards too: moving charged particles can push back on magnets.

- Motor to Motor

- Motor to Light

- Motor to Speaker

Electricity Misconceptions Spread by K-6 Textbooks

Light

The World -> EM Waves -> Eye -> Visual Processing -> Mind

Richard Feynman talks about light

The Electromagnetic Spectrum

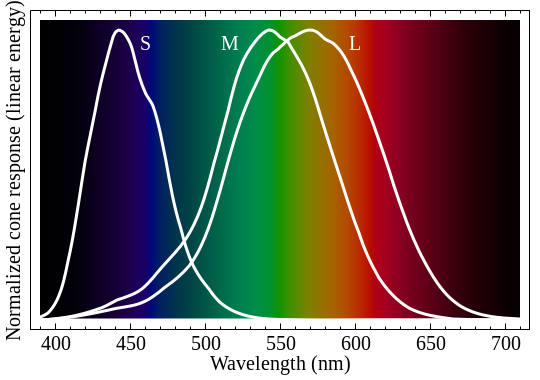

| Cone type | Name | Range | Peak wavelength | Colors |
|---|---|---|---|---|
| S (Short) | β | 400–500 nm | 420–440 nm | Blue |
| M (Medium) | γ | 450–630 nm | 534–555 nm | Green, Yellow |
| L (Long) | ρ | 500–700 nm | 564–580 nm | Green, Yellow, Red |

Wikipedia: Electromagnetic Spectrum

How Good Our Eyes Are

Our eyes have ~120 million rods that detect brightness and ~6.5 million cones that detect color.

Angular resolution: 1 arcminute, or about 0.02°. That's about 250 dpi at one foot away, 3 pixels/mm at 1 meter, or something about a foot and a half wide a mile away. We can actually see things much smaller than this when they are bright; we just can't make out their shape. For example, a bright LED would be easily visible a mile away in a dark environment.
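The arithmetic behind figures like these can be sketched in a few lines. This is a rough model that treats one arcminute as a hard limit on the smallest detail the eye resolves; the function name `smallest_detail` is made up for illustration, and real acuity varies with contrast, lighting, and the individual.

```python
import math

# One arcminute (1/60 of a degree) expressed in radians.
ARCMINUTE_RAD = math.radians(1 / 60)

def smallest_detail(distance):
    """Width subtended by one arcminute at `distance` (same units as input)."""
    return distance * math.tan(ARCMINUTE_RAD)

# Dots per inch resolvable at one foot (distance given in inches).
dpi_at_one_foot = 1 / smallest_detail(12)

# Pixels per millimeter resolvable at one meter (distance given in mm).
px_per_mm_at_one_meter = 1 / smallest_detail(1000)

# Smallest resolvable width, in inches, one mile away.
inches_at_one_mile = smallest_detail(5280 * 12)

print(round(dpi_at_one_foot), "dpi at one foot")
print(round(px_per_mm_at_one_meter, 1), "pixels/mm at one meter")
print(round(inches_at_one_mile, 1), "inches at one mile")
```

Rounding the angle up to 0.02° instead of using exactly one arcminute makes each resolvable detail about 20% larger, so small differences between estimates are expected.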

Field of View: ~160° x 175°, but resolution is heavily biased toward the center.

We can perceive electromagnetic waves from 390 to 700 nm, and can differentiate hues as close as 1-10 nm apart.

In the US, more than 3% of those 40 years and older are either legally blind (20/200 vision or worse, with corrective lenses) or visually impaired (20/40 or worse).

In the US, more than 7% of males and 0.4% of females have some form of color-blindness.

Wikipedia: Naked Eye, Wikipedia: Color Vision, CDC: The Burden of Vision Loss

Color

Emissive Color

Sunlight contains electromagnetic radiation at many wavelengths. Sunlight

An LED provides electromagnetic radiation in a very specific wavelength range.

An LED computer display has LEDs of three colors. It can vary the intensity of those three colors, but can’t provide electromagnetic radiation in the wavelengths between them.

We perceive the mix of the three colors as a single color.

Reflective Color

A reflective object doesn’t reflect light of a single wavelength. Instead it reflects and absorbs every wavelength in different amounts.

We perceive the reflections as a single color.

A reflective color cannot be brighter than the lighting at any wavelength.

We adjust our perceived color of an image based on our understanding of the lighting.

How Good our Visual Processing Is

- Depth Perception (Binocular and not)

- Estimation of “True” Color, or Color Under Different Lighting (That Dress)

- Pattern Recognition

- We can differentiate a solid light from a flickering one, up to 60+ Hz.

Thoughts

Our understanding of color/color theory is informed by the anatomy of our eye and the way our mind processes vision.

We talk about the color of something as a single thing: dark blue, pink, vivid green. We don’t think about the color of something as a little bit green, a little bit blue, and a lot red. We definitely don’t think of color as the sum of the many in-between wavelengths.

Sound

What Sound Is

A wave of air pressure and displacement. Something in contact with air vibrates. As that thing pushes forward, it pushes the air particles in front of it forward into the air particles in front of them, creating an area of higher pressure. This high-pressure area pushes out in all directions, and a wave of pressure begins to propagate through the air.

This pressure wave can push on other things like microphones and our ears. Our ears are able to detect very rapid and subtle changes in this pressure, and we are then able to understand the amplitude, frequency, and even shape of these changes. Because we have two ears, spaced a few inches apart, we can compare what each ear hears to gain spatial information as well.

How Good Our Ears Are

16,000-20,000 hairs in a curled up tube, the cochlea. The rods and cones in the eye perceive signals from different locations. The hairs in the cochlea are “tuned” to different frequencies.

Detect Pressure Changes < 1 billionth of the atmospheric pressure

We can hear sounds carrying 10 trillion times more power than that before they start to hurt.
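Ranges this large are usually expressed in decibels. The sketch below assumes the "10 trillion" figure describes sound intensity (power per area) rather than pressure, and uses the standard relation that intensity scales with the square of pressure. The function names are illustrative only.

```python
import math

def intensity_ratio_to_db(ratio):
    """Convert a ratio of sound intensities (power) to decibels."""
    return 10 * math.log10(ratio)

def intensity_ratio_to_pressure_ratio(ratio):
    """Intensity is proportional to pressure squared, so take the square root."""
    return math.sqrt(ratio)

# Assumption: "10 trillion" (1e13) is an intensity ratio.
quietest_to_painful = 1e13

print(intensity_ratio_to_db(quietest_to_painful), "dB")
print(round(intensity_ratio_to_pressure_ratio(quietest_to_painful)), "times the pressure")
```

Under that assumption the span from the quietest audible sound to the onset of pain is about 130 dB, while the pressure itself changes by a factor of only a few million.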

Detect Pitches/Frequencies from 20 Hz to 20,000 Hz, and can differentiate frequencies as close as 5 cents (about 0.76 Hz at Middle C).

An 88 Key Piano ranges from A0 (27.5 Hz) to C8 (4186.01 Hz)
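Piano pitches and cents both follow from equal temperament, where each semitone multiplies the frequency by the twelfth root of two and a cent is one hundredth of a semitone. A minimal sketch, assuming the common A4 = 440 Hz tuning and keys numbered 1 to 88 from the lowest note (both are assumptions, and the function names are illustrative):

```python
A4_HZ = 440.0   # assumed concert pitch
A4_KEY = 49     # A4 is the 49th key when counting from A0 = key 1

def key_frequency(key_number):
    """Frequency in Hz of a piano key under 12-tone equal temperament."""
    return A4_HZ * 2 ** ((key_number - A4_KEY) / 12)

def cents_to_hz(frequency, cents):
    """Size in Hz of an upward interval of `cents` starting at `frequency`."""
    return frequency * (2 ** (cents / 1200) - 1)

lowest = key_frequency(1)      # A0
middle_c = key_frequency(40)   # C4
highest = key_frequency(88)    # C8

print(round(lowest, 2), round(middle_c, 2), round(highest, 2))
print(round(cents_to_hz(middle_c, 5), 2), "Hz for 5 cents at Middle C")
```

Because a cent is a ratio rather than a fixed number of Hz, the same 5 cents is a much larger step in Hz near the top of the keyboard than near the bottom.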

Detect Timbre

About 20% of Americans have some hearing loss.

Our Ears Make Mistakes, Sensitivity of Human Ear

How Good Our Audio Processing Is

3D Location of Sound: We have two sound sensors, and we can detect differences in the amplitude and timing of the signal between them. We can turn our head to hear sounds from a different "view point". We can infer information from acoustic context, including echoes and spectral attenuation.

Source Differentiation: We can listen to one person in a noisy room, or to a specific instrument in a symphony.

Very, very good temporal pattern detection.

We easily notice off-key notes in a song.
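To get a feel for how small these timing differences are, here is a rough sketch using a simple path-difference model. The ear spacing (0.18 m) and speed of sound (343 m/s) are assumed values, the function name is illustrative, and the model ignores the way sound bends around the head.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed: dry air at roughly room temperature
EAR_SPACING_M = 0.18        # assumed: a hypothetical distance between the ears

def interaural_time_difference(azimuth_degrees):
    """Seconds by which a distant sound reaches one ear before the other.

    0 degrees is straight ahead; 90 degrees is directly to one side.
    """
    extra_path = EAR_SPACING_M * math.sin(math.radians(azimuth_degrees))
    return extra_path / SPEED_OF_SOUND_M_S

for angle in (0, 10, 45, 90):
    microseconds = interaural_time_difference(angle) * 1e6
    print(angle, "degrees:", round(microseconds), "microseconds")
```

Even for a sound directly to one side, the delay in this model is only about half a millisecond.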

Vision VS Hearing

- Our vision prioritizes spatial information over spectral information.

- Our hearing prioritizes spectral information over spatial information.

- We are pretty good at telling where a sound originates, but much better at telling where a light originates.

- We can tell what color a light is, but we understand the spectrum of a sound much better.

Light, Sound, And Virtual Reality

“To give you just one example of how much better visuals can get; in order for Crescent Bay to deliver the same pixel density as a monitor at a normal viewing distance, it would have to have a resolution of about 5K by 5K per eye, something like 20 times as many pixels as it currently has. In order for it to have retinal resolution at a field of view of 180 degrees, it would have to have something on the order of 16K by 16K resolution, roughly 200 times as many pixels.”

— Michael Abrash

Our audio recording and playback capabilities are much closer to saturating the sensitivity of our ears.

Even two perfect microphones, recordings, and speakers can do a pretty good job of fooling you into thinking a recorded sound is a real sound. But a recording is not enough on its own. We can move our heads, and we use our understanding of space when interpreting sounds. For VR sound, the computer must process the sound to place it in a three-dimensional acoustic space.

Let's Make Noise

A Wave Propagated On a Wave

- When we talk about sine waves, square waves, and triangle waves, we are not talking about the wave propagating the signal.

- Instead, we are talking about the shape of the signal: air pressure over time at a specific place (like our ear), or equally the position of the speaker over time, or the voltage on the wire over time.

Additive Synthesis

All pitched tone shapes can be created by adding sine waves of different frequencies, phases, and amplitudes.

Fundamental and Harmonics/Overtones (whole number multiples of the fundamental)

Square wave, triangle wave, sawtooth wave

The tone of a guitar, or oboe, or triangle

By controlling the envelope of these tones we can create a huge range of sounds.
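As a small illustration of additive synthesis, the sketch below builds an approximate square wave by summing the odd harmonics of a fundamental, each scaled by one over its harmonic number (the standard Fourier series for a square wave). The function name and parameters are illustrative, and this is only one of many ways to write it.

```python
import math

def additive_square(t, fundamental_hz, harmonic_count):
    """Approximate a square wave at time `t` (seconds) from sine waves.

    Sums the first `harmonic_count` odd harmonics (1, 3, 5, ...) of the
    fundamental, each with amplitude 1/n, then scales the result by 4/pi.
    """
    total = 0.0
    for k in range(1, harmonic_count + 1):
        n = 2 * k - 1  # odd harmonic number
        total += math.sin(2 * math.pi * n * fundamental_hz * t) / n
    return (4 / math.pi) * total

# One cycle of a 1 Hz tone, sampled 8 times, with 1 and then 200 harmonics.
for count in (1, 200):
    samples = [additive_square(i / 8, 1.0, count) for i in range(8)]
    print(count, [round(s, 2) for s in samples])
```

With a single harmonic the output is just a sine wave; as more harmonics are added the samples flatten toward +1 for the first half of the cycle and -1 for the second.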

The Fourier transform

- Translates a function from the time domain to frequency domain.

- Determines how strongly each frequency contributes to the sound.

- Results in an array of frequency values as long as the input source.

- The first value is the constant (zero-frequency) offset of the input. The next value corresponds to a wave whose wavelength equals the length of the input.

- The remaining values represent frequencies at integer multiples of that one.

- The algorithm for quickly performing a Fourier transform is called the Fast Fourier Transform, or FFT
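The points above can be sketched with a direct, unoptimized discrete Fourier transform. Note that this is the slow O(n²) form written for readability, not the FFT algorithm itself, and the function names are illustrative.

```python
import cmath
import math

def dft(samples):
    """Naive discrete Fourier transform: one complex value per input sample."""
    n = len(samples)
    return [
        sum(samples[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]

def magnitudes(samples):
    """How strongly each frequency bin contributes, scaled by input length."""
    n = len(samples)
    return [abs(value) / n for value in dft(samples)]

# Test signal: 64 samples containing exactly 3 cycles of a sine wave.
n = 64
signal = [math.sin(2 * math.pi * 3 * t / n) for t in range(n)]

mags = magnitudes(signal)
# Only search the first half; for real input the second half mirrors it.
peak_bin = max(range(n // 2), key=lambda k: mags[k])
print("output length:", len(mags))
print("strongest bin:", peak_bin)
```

In this sketch index 0 holds the constant offset and index k holds the component that completes k cycles over the length of the input, so a signal containing three cycles shows up in bin 3.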

P5 + FFT

P5 FFT Reference, Class Example Code